Portenta Vision Shield User Manual

Learn about the hardware and software features of the Arduino® Portenta Vision Shield.

Overview

This user manual will guide you through a practical journey covering the most interesting features of the Arduino Portenta Vision Shield. With this user manual, you will learn how to set up, configure and use this Arduino board.

Hardware and Software Requirements

Hardware Requirements

- Portenta Vision Shield Ethernet (x1) or Portenta Vision Shield LoRa®

- Portenta H7 (x1) or Portenta C33 (x1)

- USB-C® cable (x1)

Software Requirements

- OpenMV IDE

- Arduino IDE 1.8.10+, Arduino IDE 2.0+, or Arduino Web Editor

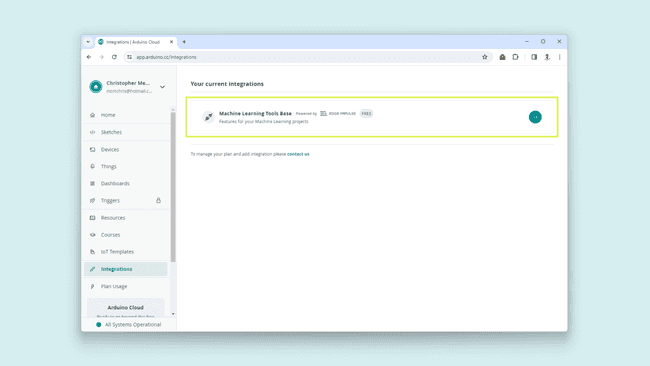

- To create custom Machine Learning models, the Machine Learning Tools add-on integrated into the Arduino Cloud is needed. In case you do not have an Arduino Cloud account, you will need to create one first.

Product Overview

The Arduino Portenta Vision Shield is an add-on board providing machine vision capabilities and additional connectivity to the Portenta family of Arduino boards, designed to meet the needs of industrial automation. The Portenta Vision Shield connects via a high-density connector to the Portenta boards with minimal hardware and software setup.

The included HM-01B0 camera module has been pre-configured to work with the OpenMV libraries provided by Arduino. Based on the specific application requirements, the Portenta Vision Shield is available in two configurations with either Ethernet or LoRa® connectivity.

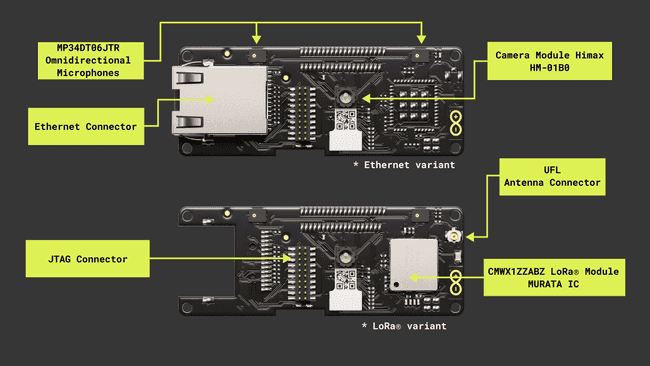

Board Architecture Overview

The Portenta Vision Shield LoRa® brings industry-rated features to your Portenta. This hardware add-on will let you run embedded computer vision applications, connect wirelessly via LoRa® to the Arduino Cloud or your own infrastructure, and activate your system upon the detection of sound events.

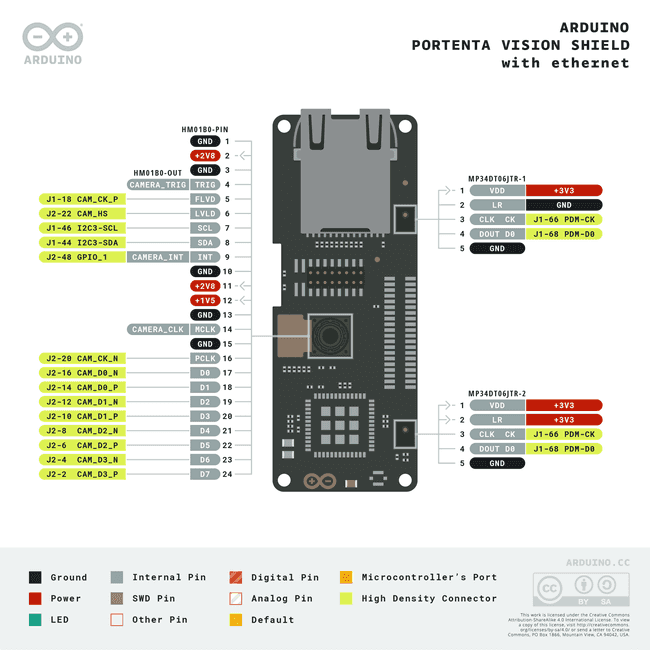

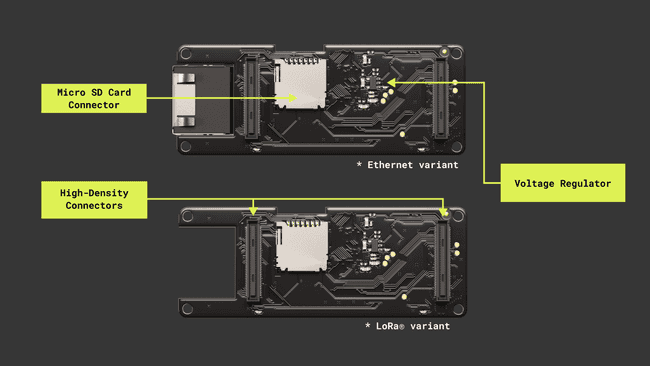

Here is an overview of the board's main components, as shown in the images above:

Power Regulator: the Portenta H7/C33 supplies 3.3 V power to the LoRa® module (ASX00026 only), Ethernet communication (ASX00021 only), Micro SD slot and dual microphones via the 3.3 V output of the high-density connectors. An onboard LDO regulator supplies a 2.8 V output (300 mA) for the camera module.

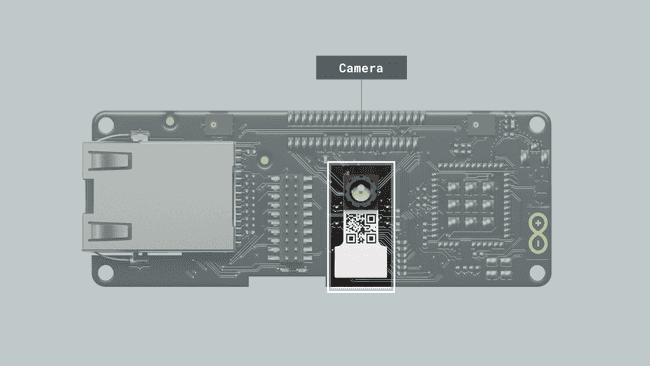

Camera: the Himax HM-01B0 Module is a very low-power camera with 320x320 resolution and a maximum of 60 FPS depending on the operating mode. Video data is transferred over a configurable 8-bit interconnect with support for frame and line synchronization. The module delivered with the Portenta Vision Shield is the monochrome version. Configuration is achieved via an I2C connection with the microcontroller of the compatible Portenta board.

HM-01B0 offers very low-power image acquisition and provides the possibility to perform motion detection without main processor interaction. The “Always-on” operation provides the ability to turn on the main processor when movement is detected with minimal power consumption.

The Portenta C33 is not compatible with the camera of the Portenta Vision Shield.

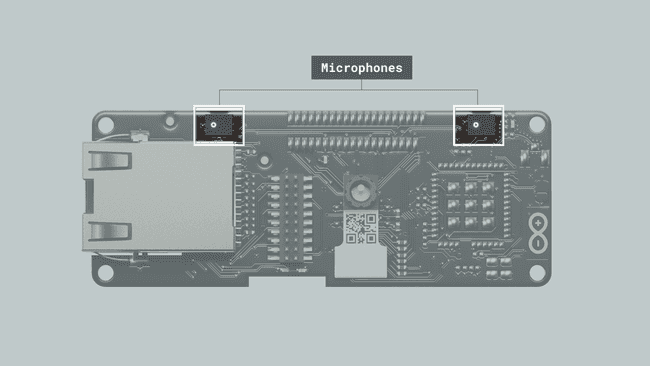

Digital Microphones: the dual MP34DT05 digital MEMS microphones are omnidirectional and operate via a capacitive sensing element with a high (64 dB) signal-to-noise ratio. The microphones have been configured to provide separate left and right audio over a single PDM stream.

The sensing element, capable of detecting acoustic waves, is manufactured using a specialized silicon micromachining process dedicated to producing audio sensors.

Micro SD Card Slot: a Micro SD card slot is available under the Portenta Vision Shield board. Available libraries allow reading and writing to FAT16/32 formatted cards.

Ethernet (ASX00021 Only): the Ethernet connector allows connecting to 10/100 Base TX networks using the Ethernet PHY available on the Portenta board.

LoRa® Module (ASX00026 Only): LoRa® connectivity is provided by the Murata CMWX1ZZABZ module. This module contains an STM32L0 processor along with a Semtech SX1276 Radio. The processor runs Arduino open-source firmware based on Semtech code.

Shield Environment Setup

Connect the Vision Shield with a Portenta H7 through their High-Density connectors and verify they are correctly aligned.

OpenMV IDE Setup

Before you can start programming MicroPython scripts for the Vision Shield, you need to download and install the OpenMV IDE.

Open the OpenMV download page in your browser, download the latest version available for your operating system, and follow the instructions of the installer.

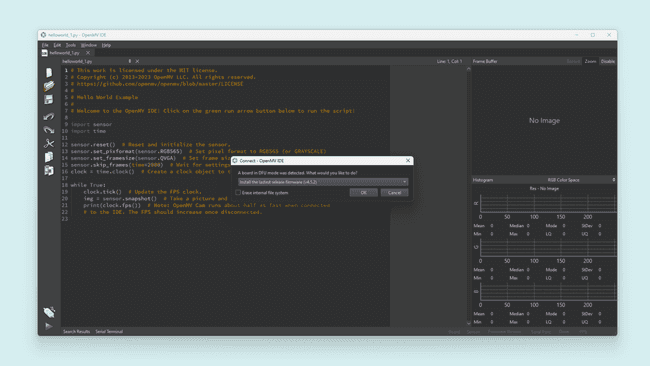

Open the OpenMV IDE and connect the Portenta H7 to your computer via the USB cable if you have not done so yet.

Click on the "connect" symbol at the bottom of the left toolbar.

If your Portenta H7 does not have the latest firmware, a pop-up will ask you to install it. Your board will enter DFU mode and its green LED will start fading.

Select Install the latest release firmware and click OK.

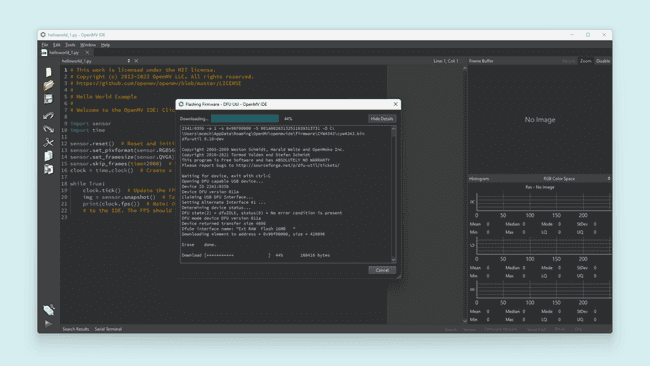

Portenta H7's green LED will start flashing while the OpenMV firmware is being uploaded to the board. A loading bar will start showing you the flashing progress.

Wait until the green LED stops flashing and fading. You will see a message saying DFU firmware update complete!

The board will start flashing its blue LED when it is ready to be connected. After confirming the completion dialog, the Portenta H7 should already be connected to the OpenMV IDE. Otherwise, click the "connect" button (plug symbol) once again (the blue blinking should stop).

While using the Portenta H7 with OpenMV, the RGB LED of the board can be used to inform the user about its current status. Some of the most important ones are the following:

🟢 Blinking Green: Your Portenta H7 onboard bootloader is running. The onboard bootloader runs for a few seconds when your H7 is powered via USB to allow OpenMV IDE to reprogram your Portenta.

🔵 Blinking Blue: Your Portenta H7 is running the default main.py script onboard.

If you overwrite the main.py script on your Portenta H7, then it will run whatever code you loaded on it instead.

If the LED is blinking blue but OpenMV IDE cannot connect to your Portenta H7, please make sure you are connecting your Portenta H7 to your PC with a USB cable that supplies both data and power.

⚪ Blinking White: Your Portenta H7 firmware is panicking because of a hardware failure. Please check that your Vision Shield's camera module is installed securely.

If you tap the Portenta H7 reset button once, the board resets. If you tap it twice, the board enters Device Firmware Upgrade (DFU) mode and its green LED starts blinking and fading.

Pinout

The full pinout is available and downloadable as PDF from the link below:

Datasheet

The complete datasheet is available and downloadable as PDF from the link below:

Schematics

The complete schematics are available and downloadable as PDF from the links below:

STEP Files

The complete STEP files are available and downloadable from the link below:

First Use

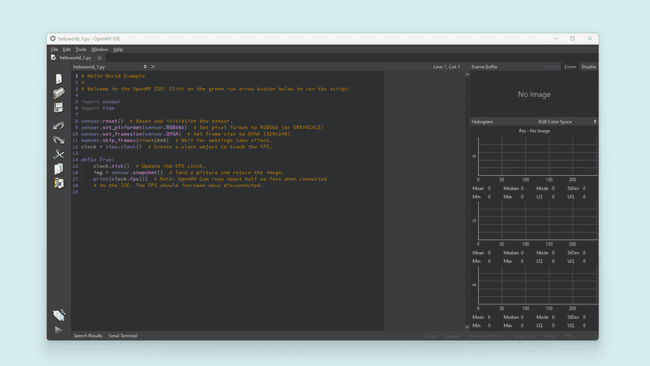

Hello World Example

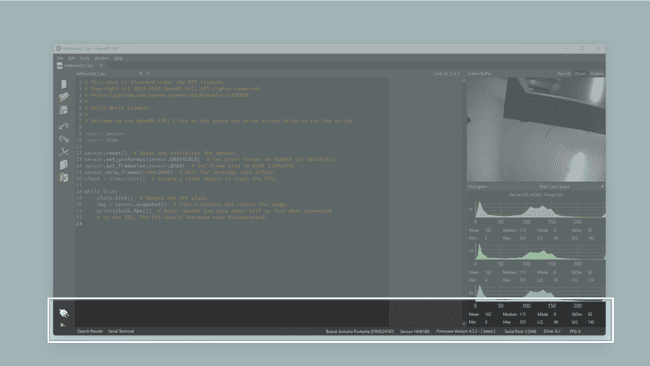

Working with camera modules, the Hello World example is a simple script that captures images from the sensor.

The following example script can be found on File > Examples > HelloWorld > helloworld.py in the OpenMV IDE.

    import sensor
    import time

    sensor.reset()  # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.GRAYSCALE)  # Set pixel format to GRAYSCALE (the Vision Shield camera is monochrome).
    sensor.set_framesize(sensor.QVGA)  # Set frame size to QVGA (320x240)
    sensor.skip_frames(time=2000)  # Wait for settings take effect.
    clock = time.clock()  # Create a clock object to track the FPS.

    while True:
        clock.tick()  # Update the FPS clock.
        img = sensor.snapshot()  # Take a picture and return the image.
        print(clock.fps())  # Note: OpenMV Cam runs about half as fast when connected
        # to the IDE. The FPS should increase once disconnected.

From the above example script, we can highlight the main functions:

sensor.set_pixformat(<Sensor>) lets you set the pixel format for the camera sensor. The Vision Shield is compatible with these: sensor.GRAYSCALE and sensor.BAYER.

To define the pixel format to any of the supported ones, just add it to the set_pixformat function argument.

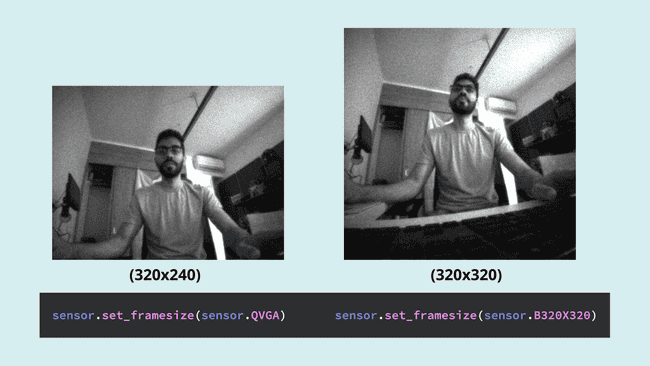

sensor.set_framesize(<Resolution>) lets you define the image frame size in terms of pixels. Here you can find all the different options.

To leverage full sensor resolution with the Vision Shield camera module HM01B0, use sensor.B320X320.

sensor.snapshot() lets you take a picture and return the image so you can save it, stream it or process it.
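The Hello World script reports its frame rate through clock.tick() and clock.fps(). As a rough illustration of how such a counter can work, here is a minimal desktop Python sketch. The FpsClock class is a hypothetical stand-in written for this manual, not the OpenMV firmware implementation, and the averaging strategy is an assumption.

```python
import time


class FpsClock:
    """Hypothetical stand-in for an FPS counter (not OpenMV's own code)."""

    def __init__(self):
        self._t_start = time.perf_counter()
        self._elapsed = 0.0  # total time spent in measured frames, in seconds
        self._frames = 0     # number of measured frames

    def tick(self):
        # Mark the start of a new frame.
        self._t_start = time.perf_counter()

    def fps(self):
        # Close the current frame and return the average rate so far.
        self._frames += 1
        self._elapsed += time.perf_counter() - self._t_start
        return self._frames / self._elapsed if self._elapsed > 0 else 0.0


clock = FpsClock()
for _ in range(5):
    clock.tick()
    time.sleep(0.01)  # placeholder for sensor.snapshot() and processing
    print(clock.fps())
```

Because the rate is averaged over all measured frames, a single slow frame has a limited effect on the printed value.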

Camera

The Portenta Vision Shield's main feature is its onboard camera, based on the HM01B0 ultra-low-power CMOS image sensor. It is well suited to Machine Learning applications such as object detection, image classification, machine/computer vision, robotics, IoT, and more.

Main Camera Features

- Ultra-Low-Power Image Sensor designed for always-on vision devices and applications

- High-sensitivity 3.6 μm BrightSense™ pixel technology

- Window, vertical flip and horizontal mirror readout

- Programmable black level calibration target, frame size, frame rate, exposure, analog gain (up to 8x) and digital gain (up to 4x)

- Automatic exposure and gain control loop with support for 50 Hz / 60 Hz flicker avoidance

- Motion Detection circuit with programmable ROI and detection threshold with digital output to serve as an interrupt

Supported Resolutions

- QQVGA (160x120) at 15, 30, 60 and 120 FPS

- QVGA (320x240) at 15, 30 and 60 FPS

- B320X320 (320x320) at 15, 30 and 45 FPS
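To get a feel for how much memory one frame needs at each resolution, the short sketch below multiplies width by height. It assumes the sensor.GRAYSCALE format stores one byte per pixel. The dictionary and function names are made up for this illustration.

```python
# Resolutions taken from the list above.
RESOLUTIONS = {
    "QQVGA": (160, 120),
    "QVGA": (320, 240),
    "B320X320": (320, 320),
}


def grayscale_frame_bytes(width, height):
    """Size of one frame, assuming 8-bit grayscale (one byte per pixel)."""
    return width * height


for name, (width, height) in RESOLUTIONS.items():
    size = grayscale_frame_bytes(width, height)
    print("%s: %dx%d -> %d bytes (%.1f KiB)" % (name, width, height, size, size / 1024))
```

Smaller frames leave more RAM for image processing and machine learning models, which is one reason to pick the lowest resolution that still fits the application.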

Power Consumption

- < 1.1 mW QQVGA resolution at 30 FPS

- < 2 mW QVGA resolution at 30 FPS

- < 4 mW QVGA resolution at 60 FPS
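The figures above can be turned into an approximate energy budget per frame by dividing power by frame rate. The sketch below does only that arithmetic. Since the listed values are upper bounds, the results are upper bounds too, and the function name is hypothetical.

```python
def energy_per_frame_uj(power_mw, fps):
    """Upper bound on sensor energy per frame, in microjoules.

    mW divided by frames per second gives mJ per frame; x1000 gives uJ.
    """
    return power_mw / fps * 1000.0


# (mode, power in mW, frames per second), from the list above.
MODES = [
    ("QQVGA @ 30 FPS", 1.1, 30),
    ("QVGA @ 30 FPS", 2.0, 30),
    ("QVGA @ 60 FPS", 4.0, 60),
]

for mode, power_mw, fps in MODES:
    print("%s: < %.1f uJ per frame" % (mode, energy_per_frame_uj(power_mw, fps)))
```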

The Vision Shield is primarily intended to be used with the OpenMV MicroPython ecosystem, so the OpenMV IDE is recommended for machine vision applications.

Snapshot Example

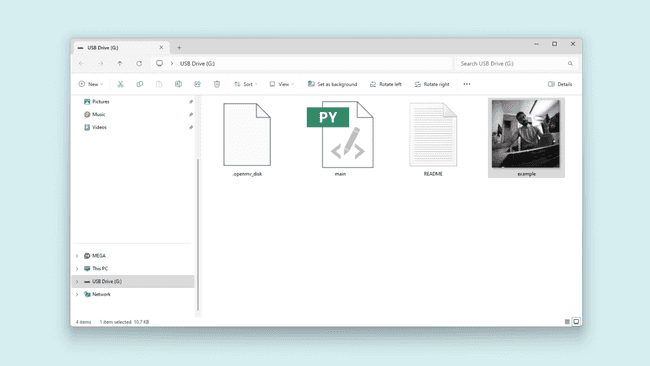

The example code below lets you take a picture and save it on the Portenta H7 local storage or in a Micro SD card as example.jpg.

    import sensor
    import time
    import machine

    sensor.reset()  # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.GRAYSCALE)  # Set pixel format to GRAYSCALE.
    sensor.set_framesize(sensor.B320X320)  # Set frame size to the full 320x320 resolution.
    sensor.skip_frames(time=2000)  # Wait for settings take effect.

    led = machine.LED("LED_BLUE")

    # Blink the blue LED for 3 seconds before taking the picture.
    start = time.ticks_ms()
    while time.ticks_diff(time.ticks_ms(), start) < 3000:
        sensor.snapshot()
        led.toggle()

    led.off()

    img = sensor.snapshot()
    img.save("example.jpg")  # or "example.bmp" (or others)

    raise (Exception("Please reset the camera to see the new file."))

If a Micro SD card is inserted into the Vision Shield, the snapshot will be stored there.

After the snapshot is taken, reset the board by pressing the reset button and the image will be on the board storage drive.

Video Recording Example

The example code below lets you record a video and save it on the Portenta H7 local storage or in a Micro SD card as example.mjpeg.

    import sensor
    import time
    import mjpeg
    import machine

    sensor.reset()  # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.GRAYSCALE)  # Set pixel format to GRAYSCALE.
    sensor.set_framesize(sensor.QVGA)  # Set frame size to QVGA (320x240)
    sensor.skip_frames(time=2000)  # Wait for settings take effect.

    led = machine.LED("LED_RED")

    led.on()  # The red LED stays on while recording.
    m = mjpeg.Mjpeg("example.mjpeg")

    clock = time.clock()  # Create a clock object to track the FPS.
    for i in range(50):
        clock.tick()
        m.add_frame(sensor.snapshot())
        print(clock.fps())

    m.close()
    led.off()

    raise (Exception("Please reset the camera to see the new file."))

We recommend you use VLC to play the video.

Sensor Control

There are several functions that allow us to configure the behavior of the camera sensor and adapt it to our needs.

Gain: the gain is related to the sensor sensitivity and affects how bright or dark the final image will be.

With the following functions, you can control the camera gain:

    sensor.set_auto_gain(True, gain_db_ceiling=16.0)  # True = auto gain enabled, with a max limited to gain_db_ceiling parameter.
    sensor.set_auto_gain(False, gain_db=8.0)  # False = auto gain disabled, fixed to gain_db parameter.
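The gain parameters above are expressed in decibels, while the camera feature list quotes gain as a multiplier (up to 8x analog, up to 4x digital). The sketch below converts between the two, assuming the usual amplitude convention of 20·log10. That convention is an assumption here, and both function names are made up for illustration.

```python
import math


def db_to_linear(gain_db):
    """Convert a gain in dB to a linear multiplier (assumes 20*log10 convention)."""
    return 10 ** (gain_db / 20.0)


def linear_to_db(gain):
    """Convert a linear multiplier to dB (assumes 20*log10 convention)."""
    return 20.0 * math.log10(gain)


print("8 dB  -> x%.2f" % db_to_linear(8.0))    # fixed gain used above
print("16 dB -> x%.2f" % db_to_linear(16.0))   # auto gain ceiling used above
print("x8    -> %.2f dB" % linear_to_db(8.0))  # maximum analog gain
```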

Orientation: flip the image captured to meet your application's needs.

With the following functions, you can control the image orientation:

    sensor.set_hmirror(True)  # Enable horizontal mirror | undo the mirror if False
    sensor.set_vflip(True)  # Enable the vertical flip | undo the flip if False

You can find complete Sensor Control examples in the OpenMV IDE.

Bar and QR Codes

The Vision Shield is ideal for production line inspections. In these examples, we are going to locate and read bar codes and QR codes.

Bar Codes

This example code can be found in File > Examples > Barcodes in the OpenMV IDE.

    import sensor
    import image
    import time
    import math

    sensor.reset()
    sensor.set_pixformat(sensor.GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)  # 320x240
    sensor.set_windowing((320, 80))  # QVGA is 320 px wide. V Res of 80 == less work (40 for 2X the speed).
    sensor.skip_frames(time=2000)
    sensor.set_auto_gain(False)  # must turn this off to prevent image washout...
    sensor.set_auto_whitebal(False)  # must turn this off to prevent image washout...
    clock = time.clock()

    # Barcode detection runs on the 320x80 window selected above.


    def barcode_name(code):
        # Map the numeric barcode type to a readable name.
        if code.type() == image.EAN2:
            return "EAN2"
        if code.type() == image.EAN5:
            return "EAN5"
        if code.type() == image.EAN8:
            return "EAN8"
        if code.type() == image.UPCE:
            return "UPCE"
        if code.type() == image.ISBN10:
            return "ISBN10"
        if code.type() == image.UPCA:
            return "UPCA"
        if code.type() == image.EAN13:
            return "EAN13"
        if code.type() == image.ISBN13:
            return "ISBN13"
        if code.type() == image.I25:
            return "I25"
        if code.type() == image.DATABAR:
            return "DATABAR"
        if code.type() == image.DATABAR_EXP:
            return "DATABAR_EXP"
        if code.type() == image.CODABAR:
            return "CODABAR"
        if code.type() == image.CODE39:
            return "CODE39"
        if code.type() == image.PDF417:
            return "PDF417"
        if code.type() == image.CODE93:
            return "CODE93"
        if code.type() == image.CODE128:
            return "CODE128"


    while True:
        clock.tick()
        img = sensor.snapshot()
        codes = img.find_barcodes()
        for code in codes:
            img.draw_rectangle(code.rect())
            print_args = (
                barcode_name(code),
                code.payload(),
                (180 * code.rotation()) / math.pi,  # radians to degrees
                code.quality(),
                clock.fps(),
            )
            print(
                'Barcode %s, Payload "%s", rotation %f (degrees), quality %d, FPS %f'
                % print_args
            )
        if not codes:
            print("FPS %f" % clock.fps())

The format, payload, orientation and quality will be printed out in the Serial Monitor when a bar code becomes readable.

QR Codes

This example code can be found in File > Examples > Barcodes in the OpenMV IDE.

    import sensor
    import time

    sensor.reset()
    sensor.set_pixformat(sensor.GRAYSCALE)
    sensor.set_framesize(sensor.B320X320)
    sensor.skip_frames(time=2000)
    sensor.set_auto_gain(False)  # must turn this off to prevent image washout...
    clock = time.clock()

    while True:
        clock.tick()
        img = sensor.snapshot()
        img.lens_corr(1.8)  # strength of 1.8 is good for the 2.8mm lens.
        for code in img.find_qrcodes():
            img.draw_rectangle(code.rect(), color=(255, 255, 0))
            print(code)
        print(clock.fps())

The coordinates, size, and payload will be printed out in the Serial Monitor when a QR code becomes readable.

Face Tracking

You can track faces using the built-in FOMO face detection model. This example can be found in File > Examples > Machine Learning > TensorFlow > tf_object_detection.py.

This script will draw a circle on each detected face and will print their coordinates in the Serial Monitor.

    import sensor
    import time
    import tf
    import math

    sensor.reset()  # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.GRAYSCALE)  # Set pixel format to GRAYSCALE.
    sensor.set_framesize(sensor.QVGA)  # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))  # Set 240x240 window.
    sensor.skip_frames(time=2000)  # Let the camera adjust.

    min_confidence = 0.4

    # Load built-in FOMO face detection model
    labels, net = tf.load_builtin_model("fomo_face_detection")

    # Alternatively, models can be loaded from the filesystem storage.
    # net = tf.load('<object_detection_network>', load_to_fb=True)
    # labels = [line.rstrip('\n') for line in open("labels.txt")]

    colors = [  # Add more colors if you are detecting more than 7 types of classes at once.
        (255, 0, 0),
        (0, 255, 0),
        (255, 255, 0),
        (0, 0, 255),
        (255, 0, 255),
        (0, 255, 255),
        (255, 255, 255),
    ]

    clock = time.clock()
    while True:
        clock.tick()

        img = sensor.snapshot()

        # detect() returns all objects found in the image (split out per class already)
        # we skip class index 0, as that is the background, and then draw circles of the center
        # of our objects

        for i, detection_list in enumerate(
            net.detect(img, thresholds=[(math.ceil(min_confidence * 255), 255)])
        ):
            if i == 0:
                continue  # background class
            if len(detection_list) == 0:
                continue  # no detections for this class?

            print("********** %s **********" % labels[i])
            for d in detection_list:
                [x, y, w, h] = d.rect()
                center_x = math.floor(x + (w / 2))
                center_y = math.floor(y + (h / 2))
                print(f"x {center_x}\ty {center_y}")
                img.draw_circle((center_x, center_y, 12), color=colors[i], thickness=2)

        print(clock.fps(), "fps", end="\n")
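Two small pieces of arithmetic in the face tracking script are easy to miss: the confidence value is scaled to a 0 to 255 range before being passed as a threshold, and each detection rectangle is reduced to its center point. The sketch below isolates both steps in plain Python so they can be tried on a computer. The helper names are hypothetical and do not belong to the tf module.

```python
import math


def confidence_to_threshold(min_confidence):
    """Scale a 0.0-1.0 confidence to the (low, high) pair used in the script."""
    return (math.ceil(min_confidence * 255), 255)


def rect_center(rect):
    """Return the integer center of an (x, y, w, h) rectangle."""
    x, y, w, h = rect
    return (math.floor(x + (w / 2)), math.floor(y + (h / 2)))


print(confidence_to_threshold(0.5))
print(rect_center((10, 20, 30, 40)))
```

Raising min_confidence narrows the accepted range and reduces false detections, at the cost of missing faces that the model is less sure about.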

You can load different Machine Learning models for detecting other objects, for example, persons.

Download the .tflite model and .txt labels files and copy them to the Portenta H7 local storage.

Use the following example script to run the person detection model.

    import sensor
    import time
    import tf
    import math
    import uos, gc

    sensor.reset()  # Reset and initialize the sensor.
    sensor.set_pixformat(sensor.GRAYSCALE)  # Set pixel format to GRAYSCALE.
    sensor.set_framesize(sensor.QVGA)  # Set frame size to QVGA (320x240)
    sensor.set_windowing((240, 240))  # Set 240x240 window.
    sensor.skip_frames(time=2000)  # Let the camera adjust.

    # Load the model and its labels from the filesystem storage.
    net = tf.load('person_detection.tflite', load_to_fb=True)
    labels = [line.rstrip('\n') for line in open("person_detection.txt")]

    clock = time.clock()
    while True:
        clock.tick()

        img = sensor.snapshot()

        for obj in net.classify(img, min_scale=1.0, scale_mul=0.8, x_overlap=0.5, y_overlap=0.5):
            print("*********** \nDetections at [x=%d,y=%d, w=%d, h=%d]" % obj.rect())
            img.draw_rectangle(obj.rect())
            predictions_list = list(zip(labels, obj.output()))

            # Print the score of every label.
            for i in range(len(predictions_list)):
                print("%s = %f" % (predictions_list[i][0], predictions_list[i][1]))

        print(clock.fps(), "fps", end="\n")

When a person is in the field of view of the camera, you should see the inference result for the person label.
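The person detection script prints the score of every label. If only the most likely label is of interest, the label and score lists can be reduced to a single pair, as in the plain Python sketch below. The label names and scores are made-up sample values, since the actual contents of person_detection.txt are not shown here, and top_prediction is a hypothetical helper.

```python
def top_prediction(labels, scores):
    """Return the (label, score) pair with the highest score."""
    return max(zip(labels, scores), key=lambda pair: pair[1])


# Sample values for illustration only.
sample_labels = ["no_person", "person"]
sample_scores = [0.12, 0.88]

label, score = top_prediction(sample_labels, sample_scores)
print("%s = %f" % (label, score))
```

On the board, the same function could be called with labels and obj.output() from the script above.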

Microphone

The Portenta Vision Shield features two omnidirectional microphones, based on the MP34DT05 ultra-compact, low-power, and digital MEMS microphone.

Features:

- AOP = 122.5 dB SPL

- 64 dB signal-to-noise ratio

- Omnidirectional sensitivity

- –26 dBFS ± 1 dB sensitivity

FFT Example

You can analyze frequencies present in sounds alongside their harmonic features using this example.

By measuring the sound level on each microphone we can easily know from where the sound is coming, an interesting capability for robotics and AIoT applications.

    import image
    import audio
    from ulab import numpy as np
    from ulab import utils

    CHANNELS = 2
    SIZE = 512 // (2 * CHANNELS)

    raw_buf = None
    fb = image.Image(SIZE + 50, SIZE, image.RGB565, copy_to_fb=True)
    audio.init(channels=CHANNELS, frequency=16000, gain_db=24, highpass=0.9883)


    def audio_callback(buf):
        # NOTE: do Not call any function that allocates memory.
        global raw_buf
        if raw_buf is None:
            raw_buf = buf


    # Start audio streaming
    audio.start_streaming(audio_callback)


    def draw_fft(img, fft_buf):
        fft_buf = (fft_buf / max(fft_buf)) * SIZE
        fft_buf = np.log10(fft_buf + 1) * 20
        color = (222, 241, 84)
        for i in range(0, SIZE):
            img.draw_line(i, SIZE, i, SIZE - int(fft_buf[i]), color, 1)


    def draw_audio_bar(img, level, offset):
        blk_size = SIZE // 10
        color = (214, 238, 240)
        blk_space = blk_size // 4
        for i in range(0, int(round(level / 10))):
            fb.draw_rectangle(
                SIZE + offset,
                SIZE - ((i + 1) * blk_size) + blk_space,
                20,
                blk_size - blk_space,
                color,
                1,
                True,
            )


    while True:
        if raw_buf is not None:
            pcm_buf = np.frombuffer(raw_buf, dtype=np.int16)
            raw_buf = None

            if CHANNELS == 1:
                fft_buf = utils.spectrogram(pcm_buf)
                l_lvl = int((np.mean(abs(pcm_buf[1::2])) / 32768) * 100)
            else:
                fft_buf = utils.spectrogram(pcm_buf[0::2])
                l_lvl = int((np.mean(abs(pcm_buf[1::2])) / 32768) * 100)
                r_lvl = int((np.mean(abs(pcm_buf[0::2])) / 32768) * 100)

            fb.clear()
            draw_fft(fb, fft_buf)
            draw_audio_bar(fb, l_lvl, 0)
            if CHANNELS == 2:
                draw_audio_bar(fb, r_lvl, 25)
            fb.flush()

    # Stop streaming
    audio.stop_streaming()

With this script running, you will be able to see the Fast Fourier Transform result in the image viewport, along with the sound level on each microphone channel.

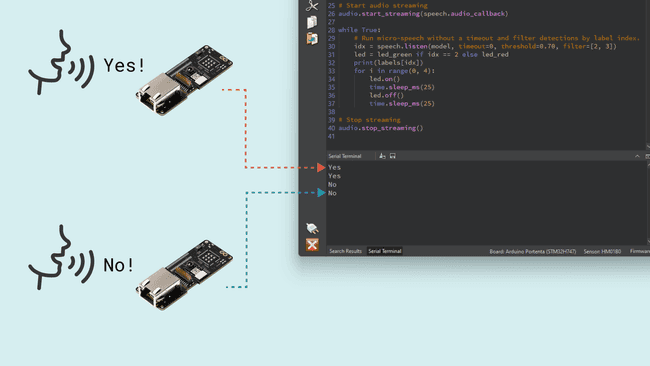
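The per-channel level computed in the FFT script can also be used to estimate which side a sound comes from. The following plain Python sketch repeats the level calculation on an interleaved stereo buffer and compares the two results. It follows the script's channel mapping (odd indices left, even indices right). The louder_side helper and its margin parameter are assumptions added for illustration.

```python
def channel_level(samples):
    """Mean absolute amplitude as a percentage of 16-bit full scale."""
    if not samples:
        return 0
    return int(sum(abs(s) for s in samples) / len(samples) / 32768 * 100)


def split_levels(pcm):
    """Return (left, right) levels from an interleaved 16-bit stereo buffer."""
    left = channel_level(pcm[1::2])   # same slicing as l_lvl in the script
    right = channel_level(pcm[0::2])  # same slicing as r_lvl in the script
    return left, right


def louder_side(left, right, margin=5):
    """Name the louder side; 'margin' (hypothetical) ignores small differences."""
    if left - right > margin:
        return "left"
    if right - left > margin:
        return "right"
    return "center"


# Synthetic buffer: quiet right channel, loud left channel.
pcm = [1000, 16384] * 4
left, right = split_levels(pcm)
print(left, right, louder_side(left, right))
```

This only compares loudness, so it gives a coarse left or right hint rather than an angle.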

Speech Recognition Example

You can easily implement sound/voice recognition applications using Machine Learning on the edge. This means that the Portenta H7 plus the Vision Shield can run these algorithms locally.

For this example, we are going to test a pre-trained model that can recognize the yes and no keywords.

First, download the .tflite model and copy it to the Portenta H7 local storage.

Use the following script to run the example. It can also be found on File > Examples > Audio > micro_speech.py in the OpenMV IDE.

    import audio
    import time
    import tf
    import micro_speech
    import pyb

    labels = ["Silence", "Unknown", "Yes", "No"]

    led_red = pyb.LED(1)
    led_green = pyb.LED(2)

    model = tf.load("/model.tflite")
    speech = micro_speech.MicroSpeech()
    audio.init(channels=1, frequency=16000, gain_db=24, highpass=0.9883)

    # Start audio streaming
    audio.start_streaming(speech.audio_callback)

    while True:
        # Run micro-speech without a timeout and filter detections by label index.
        idx = speech.listen(model, timeout=0, threshold=0.70, filter=[2, 3])
        led = led_green if idx == 2 else led_red  # green for "Yes", red for "No"
        print(labels[idx])
        for i in range(0, 4):
            led.on()
            time.sleep_ms(25)
            led.off()
            time.sleep_ms(25)

    # Stop streaming
    audio.stop_streaming()

Now, just say yes or no. The recognized label is printed in the Serial Monitor and the green (yes) or red (no) LED blinks.

Machine Learning Tool

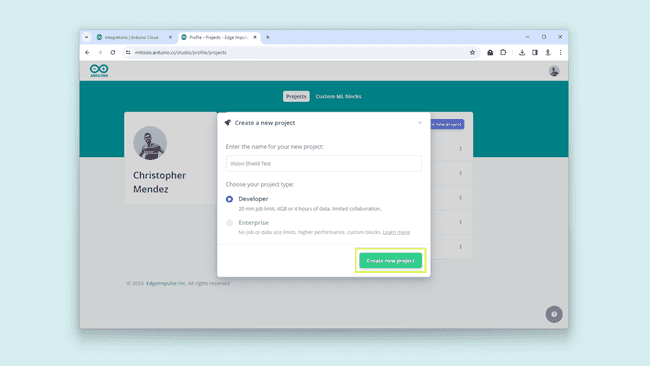

The main features of the Portenta Vision Shield are its audio and video capabilities, which make it a good fit for a wide range of machine learning applications.

Creating this type of application is straightforward with our Machine Learning Tool powered by Edge Impulse®, a no-code environment where you can create audio, motion, proximity and image processing models.

The first step to start creating awesome artificial intelligence and machine learning projects is to create an Arduino Cloud account.

There you will find a dedicated integration called Machine Learning Tools.

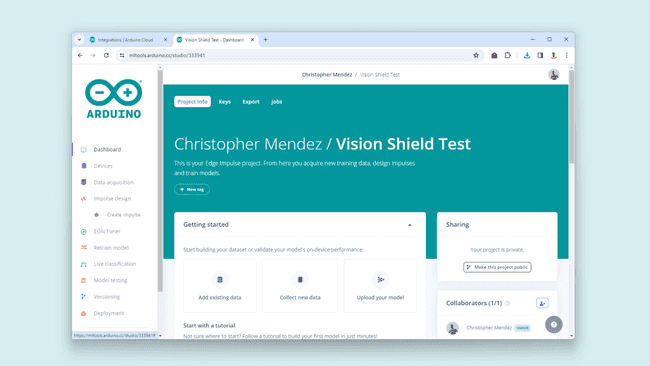

Once in, create a new project and give it a name.

Enter your newly created project and the landing page will look like the following:

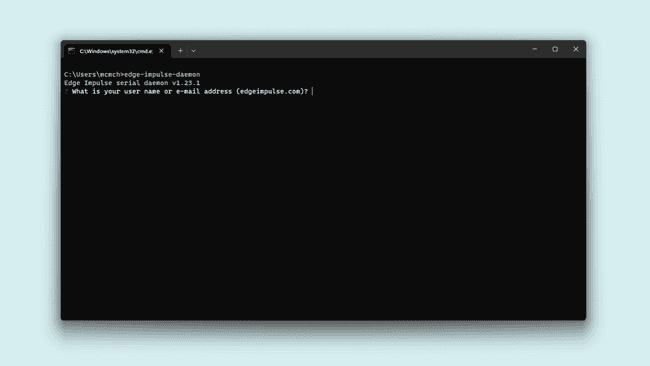

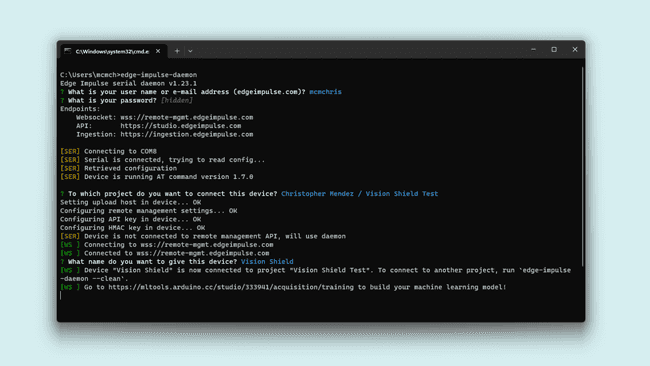

Edge Impulse® Environment Setup

Now, it is time to set up the Edge Impulse® environment on your PC. For this, follow these instructions to install the Edge Impulse CLI.

For Windows users: make sure to install Visual Studio Community and Visual Studio Build Tools.

Download and install the latest Arduino CLI from here. (Video Guide for Windows)

Download the latest Edge Impulse® firmware for the Portenta H7, and unzip the file.

Open the flash script for your operating system (flash_windows.bat, flash_mac.command or flash_linux.sh) to flash the firmware.

To test if the Edge Impulse CLI was installed correctly, open the Command Prompt or your favorite terminal and run:

    edge-impulse-daemon

If everything goes okay, you should be asked for your Edge Impulse account credentials.

Enter your account username or e-mail address and your password.

Select the project you created in the Arduino ML Tools from the list.

Give your device a name and wait for it to connect to the platform.

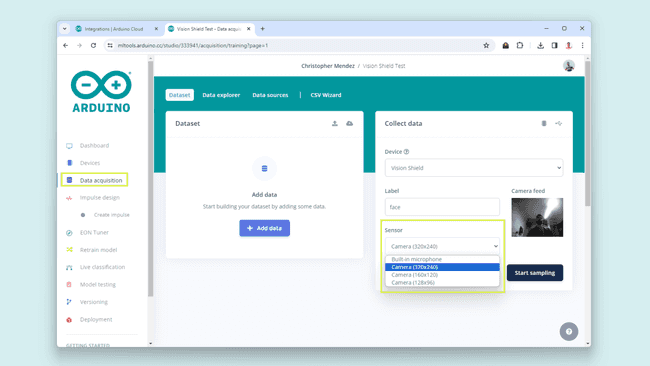

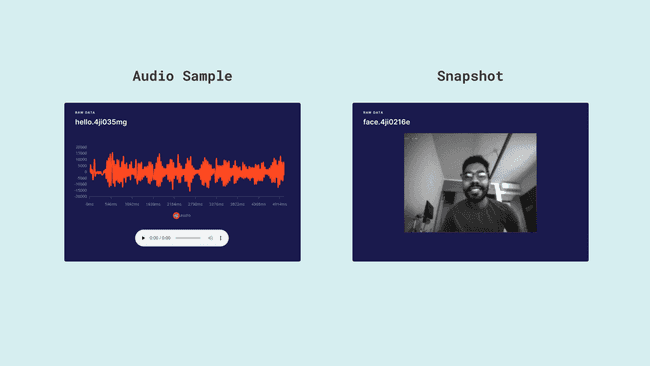

Uploading Sensor Data

The first step in developing a machine learning project is to create a dataset for your model. This means uploading data from the Vision Shield sensors to your project.

To upload data from your Vision Shield on the Machine Learning Tools platform, navigate to Data Acquisition.

In this section, you will be able to select the Vision Shield onboard sensors individually.

This is the supported sensors list:

- Built-in microphone

- Camera (320x240)

- Camera (160x160)

- Camera (128x96)

Now that you know how to start creating your dataset from scratch with our Machine Learning Tools, you can get inspired by some of our ML projects listed below:

- Image Classification with Edge Impulse® (Article).

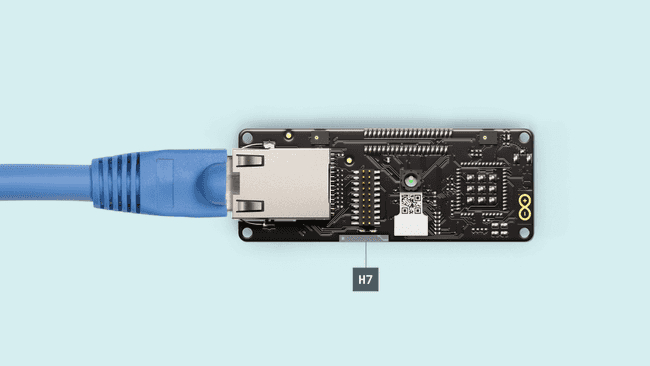

Ethernet (ASX00021)

The Portenta Vision Shield - Ethernet gives you the possibility of connecting your Portenta H7 board to the internet using a wired connection.

First, connect the Vision Shield - Ethernet to the Portenta H7. Now connect the USB-C® cable to the Portenta H7 and your computer. Lastly, connect the Ethernet cable to the Portenta Vision Shield's Ethernet port and your router or modem.

Now you are ready to test the connectivity with the following Python script. This example lets you know if an Ethernet cable is connected successfully to the shield.

    import network
    import time

    lan = network.LAN()

    # Make sure Eth is not in low-power mode.
    lan.config(low_power=False)

    # Delay for auto negotiation
    time.sleep(3.0)

    while True:
        print("Cable is", "connected." if lan.status() else "disconnected.")
        time.sleep(1.0)

If the physical connection is detected, you will see the following message in the OpenMV Serial Monitor:

    Cable is connected.

Once the connection is confirmed, we can try to connect to the internet using the example script below.

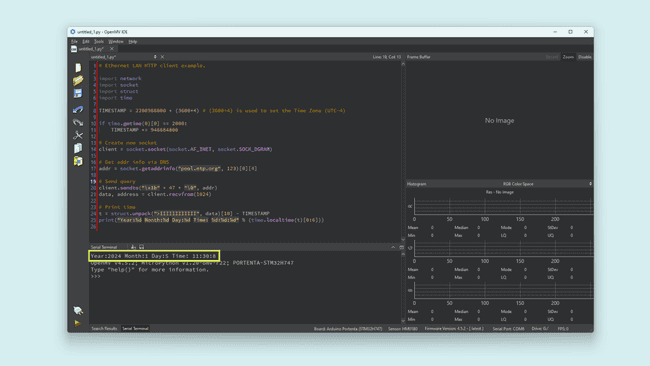

This example lets you gather the current time from an NTP server.

    import network
    import socket
    import struct
    import time

    TIMESTAMP = 2208988800 + (3600*4)  # (3600*4) is used to set the Time Zone (UTC-4)

    # Some ports count time from the year 2000 instead of 1970.
    if time.gmtime(0)[0] == 2000:
        TIMESTAMP += 946684800

    # Create new socket
    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

    # Get addr info via DNS
    addr = socket.getaddrinfo("pool.ntp.org", 123)[0][4]

    # Send query (48-byte NTP request)
    client.sendto(b"\x1b" + 47 * b"\0", addr)
    data, address = client.recvfrom(1024)

    # Print time
    t = struct.unpack(">IIIIIIIIIIII", data)[10] - TIMESTAMP
    print("Year:%d Month:%d Day:%d Time: %d:%d:%d" % (time.localtime(t)[0:6]))

Run the script and the current date and time will be printed in the OpenMV IDE Serial Monitor.

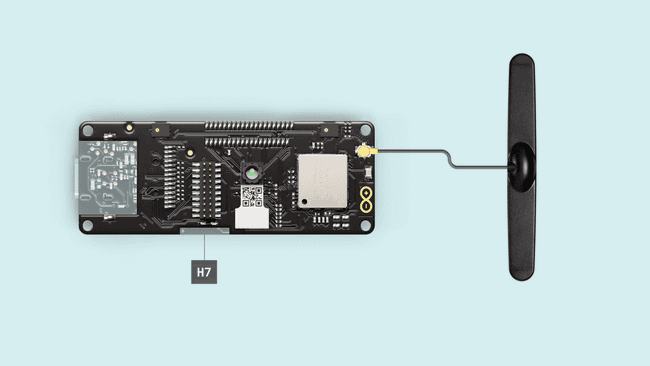

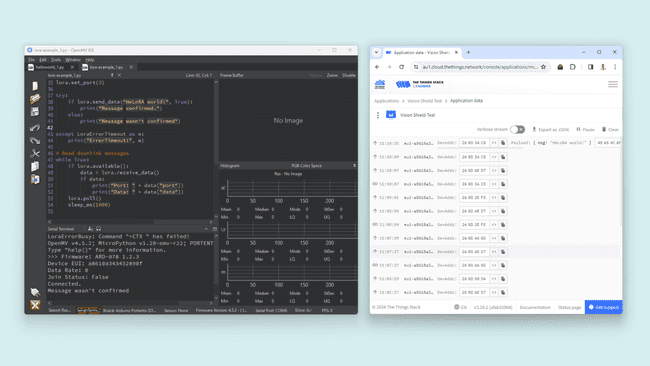
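The NTP script relies on two details: the request is a 48-byte packet whose first byte is 0x1b, and the reply carries the time as seconds since 1900 in its eleventh 32-bit word. The sketch below reproduces that decoding offline in desktop Python with a hand-built reply, so no network is needed. The function names are hypothetical, and the sketch assumes a Unix epoch of 1970 (the script above handles ports that start at 2000).

```python
import struct
import time

NTP_UNIX_DELTA = 2208988800  # seconds between 1900-01-01 and 1970-01-01


def build_request():
    """48-byte client request: first byte 0x1b = LI 0, version 3, mode 3."""
    return b"\x1b" + 47 * b"\0"


def decode_transmit_seconds(packet):
    """Read the integer seconds of the transmit timestamp (word index 10)."""
    return struct.unpack(">12I", packet[:48])[10]


def ntp_to_unix(ntp_seconds, utc_offset_hours=0):
    """Convert NTP seconds to Unix seconds, shifted by a time zone offset."""
    return ntp_seconds - NTP_UNIX_DELTA + utc_offset_hours * 3600


# Hand-built reply whose transmit timestamp is one day after the Unix epoch.
words = [0] * 12
words[10] = NTP_UNIX_DELTA + 86400
fake_reply = struct.pack(">12I", *words)

t = ntp_to_unix(decode_transmit_seconds(fake_reply), utc_offset_hours=-4)
print("Year:%d Month:%d Day:%d Time: %d:%d:%d" % time.gmtime(t)[0:6])
```

Passing the offset as a parameter instead of folding it into the constant makes it easier to switch time zones.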

LoRa® (ASX00026)

The Vision Shield - LoRa® can extend your project's connectivity by leveraging its LoRa® module for long-range communication in remote areas that lack internet access. It is powered by the Murata CMWX1ZZABZ module, which contains an STM32L0 processor along with a Semtech SX1276 radio.

To test the LoRa® connectivity, first, connect the Vision Shield - LoRa® to the Portenta H7. Attach the LoRa® antenna to its respective connector. Now connect the USB-C® cable to the Portenta H7 and your computer.

Follow this guide to learn how to set up and create your end device on The Things Network.

The following Python script lets you connect to The Things Network using LoRaWAN® and send a Hello World message:

    from lora import *
    from time import sleep_ms  # used by the polling loop below

    lora = Lora(band=BAND_AU915, poll_ms=60000, debug=False)

    print("Firmware:", lora.get_fw_version())
    print("Device EUI:", lora.get_device_eui())
    print("Data Rate:", lora.get_datarate())
    print("Join Status:", lora.get_join_status())

    # Example keys for connecting to the backend
    appEui = "*****************"  # now called JoinEUI
    appKey = "*****************************"

    try:
        lora.join_OTAA(appEui, appKey)
        # Or ABP:
        # lora.join_ABP(devAddr, nwkSKey, appSKey, timeout=5000)
    # You can catch individual errors like timeout, rx etc...
    except LoraErrorTimeout as e:
        print("Something went wrong; are you indoor? Move near a window and retry")
        print("ErrorTimeout:", e)
    except LoraErrorParam as e:
        print("ErrorParam:", e)

    print("Connected.")
    lora.set_port(3)

    try:
        if lora.send_data("HeLoRA world!", True):
            print("Message confirmed.")
        else:
            print("Message wasn't confirmed")

    except LoraErrorTimeout as e:
        print("ErrorTimeout:", e)

    # Read downlink messages
    while True:
        if lora.available():
            data = lora.receive_data()
            if data:
                # Passing the values as separate arguments works for any type.
                print("Port:", data["port"])
                print("Data:", data["data"])
        lora.poll()
        sleep_ms(1000)

Find the frequency used in your country for The Things Network on this list and modify the parameter in the script within the following function.

    lora = Lora(band=BAND_AU915, poll_ms=60000, debug=False)  # change the band with yours e.g BAND_US915

Define your application appEUI and appKey:

    appEui = "*****************"  # now called JoinEUI
    appKey = "*****************************"

After configuring your credentials and frequency band, you can run the script. You must be in an area with LoRaWAN® coverage. If not, the script should print an alert advising you to move near a window.
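A common cause of failed joins is a mistyped credential. In LoRaWAN®, the JoinEUI (appEUI) is 8 bytes long and the appKey is 16 bytes, so written as hexadecimal strings they have 16 and 32 characters. The sketch below checks this before a join is attempted. It assumes the credentials are passed as plain hex strings, and both helper functions are hypothetical, not part of the lora module.

```python
HEX_DIGITS = "0123456789abcdefABCDEF"


def is_hex_key(value, n_bytes):
    """True if value is exactly n_bytes written as hexadecimal digits."""
    if len(value) != 2 * n_bytes:
        return False
    return all(c in HEX_DIGITS for c in value)


def check_otaa_credentials(app_eui, app_key):
    """Return a list of problems found; an empty list means the format is fine."""
    problems = []
    if not is_hex_key(app_eui, 8):
        problems.append("appEui must be 16 hex characters (8 bytes)")
    if not is_hex_key(app_key, 16):
        problems.append("appKey must be 32 hex characters (16 bytes)")
    return problems


# The masked placeholders from the script are rejected until replaced.
for problem in check_otaa_credentials("*****************", "*" * 29):
    print(problem)
```

A format check like this cannot tell whether the keys are registered on the network. It only catches length and character mistakes early.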

You can set up your own LoRaWAN® network using our LoRa® gateways.

Support

If you encounter any issues or have questions while working with the Vision Shield, we provide various support resources to help you find answers and solutions.

Help Center

Explore our Help Center, which offers a comprehensive collection of articles and guides for the Vision Shield. The Arduino Help Center is designed to provide in-depth technical assistance and help you make the most of your device.

Forum

Join our community forum to connect with other Portenta Vision Shield users, share your experiences, and ask questions. The forum is an excellent place to learn from others, discuss issues, and discover new ideas and projects related to the Vision Shield.

Contact Us

Please get in touch with our support team if you need personalized assistance or have questions not covered by the help and support resources described before. We're happy to help you with any issues or inquiries about the Vision Shield.


License

The Arduino documentation is licensed under the Creative Commons Attribution-Share Alike 4.0 license.